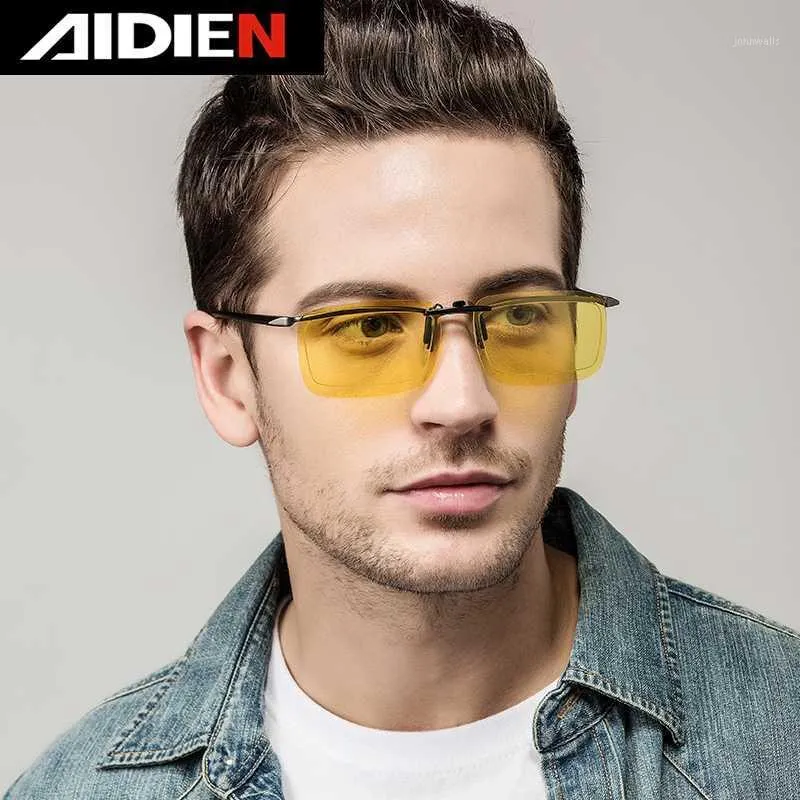

Polarized clip-on sunglasses with yellow lenses for myopia glasses, men's and women's anti-glare night-vision car driving Goggle1, from SAR 166.45 | DHgate

Price of trendy yellow sunglasses, small frame, clear lenses, vintage sunglasses with hexagonal metal frame ej in the UAE | via Amazon UAE | Kanbkam

Rectangular sunglasses for men by جوبين, military pilot sunglasses with an aluminum-magnesium metal frame, UV protection: buy online at the best prices in Saudi Arabia - Souq.com now

Men's night-vision driving glasses, driver's anti-glare glasses, alloy frame, polarized sunglasses with yellow lenses, 2023 gift | AliExpress

ميار night-vision glasses, aviator night-driving glasses for men and women, yellow anti-glare driving glasses, golden-yellow night-driving glasses, M: buy online at the best prices in Saudi Arabia - Souq.com now

Zeontaat night-vision driver's glasses, yellow-lens sunglasses for myopia, car driving glasses, Uvprotection polarized sunglasses | Fruugo AE

CAPONI night-vision sunglasses for men and women, smart photochromic yellow lenses, polarized aviator glasses, UV400, model BSYS3109 | Men's sunglasses | AliExpress

NV night glasses from Nature's Pillows: nearly indestructible, ideal for any weather, yellow glasses that block night glare and reduce eye strain : Amazon.ae: Clothing, Shoes & Jewelry

Source oversized flat-top square mirrored ocean yellow-lens stylish street-snap sunglasses for women and men on m.alibaba.com

VEITHDIA men's day and night vision sunglasses, anti-glare driving goggles, yellow photochromic polarized lenses, K3043: buy at low prices in the Joom online store

Glasses for men and women, car drivers' night-vision glasses, yellow anti-glare sunglasses, driving glasses: buy at low prices in the Joom online store
